Meet the Kinara Ara-2 AI processor, built for Edge AI acceleration. It is designed to handle the heavy compute demands of Generative AI and transformer-based models cost-effectively.

Built on the same flexible, efficient dataflow architecture as its predecessor, the Ara-1, the Ara-2 delivers a major performance boost, with large gains in both performance per watt and performance per dollar for your AI workloads.

Run complex Generative AI models at the edge, enabling real-time creativity and innovation.

Fuel powerful transformer-based models, driving breakthroughs in natural language processing and machine translation.

Revolutionize edge applications from smart cities to healthcare diagnostics, all while minimizing cost and maximizing efficiency.

Ara-2 Processor

With 8 Gen 2 neural cores and access to up to 16GB of LPDDR4(X) memory, the Kinara Ara-2 gives edge servers and laptops high-performance, cost-effective, and energy-efficient inference. For added operational confidence, Ara-2 supports secure boot and encrypted memory access.

KU-2 USB, KM-2 M.2

The Kinara Ara-2 accelerator modules enable high-performance, power-efficient AI inference for Edge AI applications, including Generative AI workloads such as Stable Diffusion and Llama-2.

Powered by the Kinara Ara-2, the KU-2 and KM-2 are ideal for running inference on models ranging from CNNs to complex vision transformers for a variety of applications in smart cities, retail, and gaming.

The KU-2 and KM-2 are available with flexible memory options: 2GB for conventional AI and 8GB for GenAI. Both modules can run multiple AI models across multiple streams simultaneously.

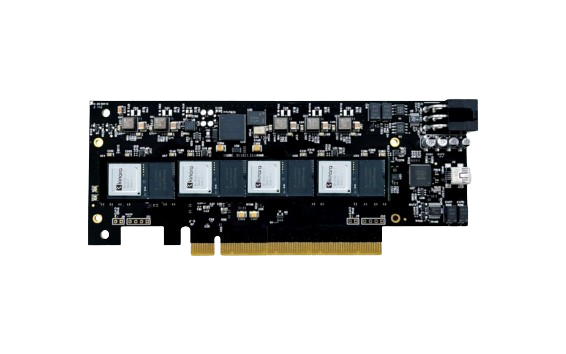

KP-2 PCIe Card

The Kinara Ara-2 PCIe AI accelerator card enables high-performance, power-efficient AI inference for edge server applications, including Generative AI workloads such as Stable Diffusion and LLMs.

The KP-2 is powered by four Ara-2 processors, rivaling the latest GPU-based inference cards in performance at a fraction of the power and cost.

The KP-2 is ideal for running heavy inference workloads with multiple modern CNNs, transformers, and Generative AI models in a variety of smart city, retail, gaming, and enterprise applications.

Download Product Brief
